🚀 Scalability in System Design

Building Systems That Grow With You

What is Scalability?

Scalability refers to a system’s ability to handle increased load—whether it's growing user traffic, expanding datasets, more API requests, or a broader geographic footprint—without degrading performance, reliability, or cost-efficiency. A scalable system grows gracefully, not painfully.

Think of scalability like city planning. A well-designed city can handle population growth by expanding housing, roads, and infrastructure. A poorly planned city becomes congested and chaotic. Similarly, scalable systems are built to support growth without re-architecture or downtime.

In this post, you'll learn:

What scalability really means in modern system design

Core techniques like load balancing, caching, sharding, and asynchronous processing

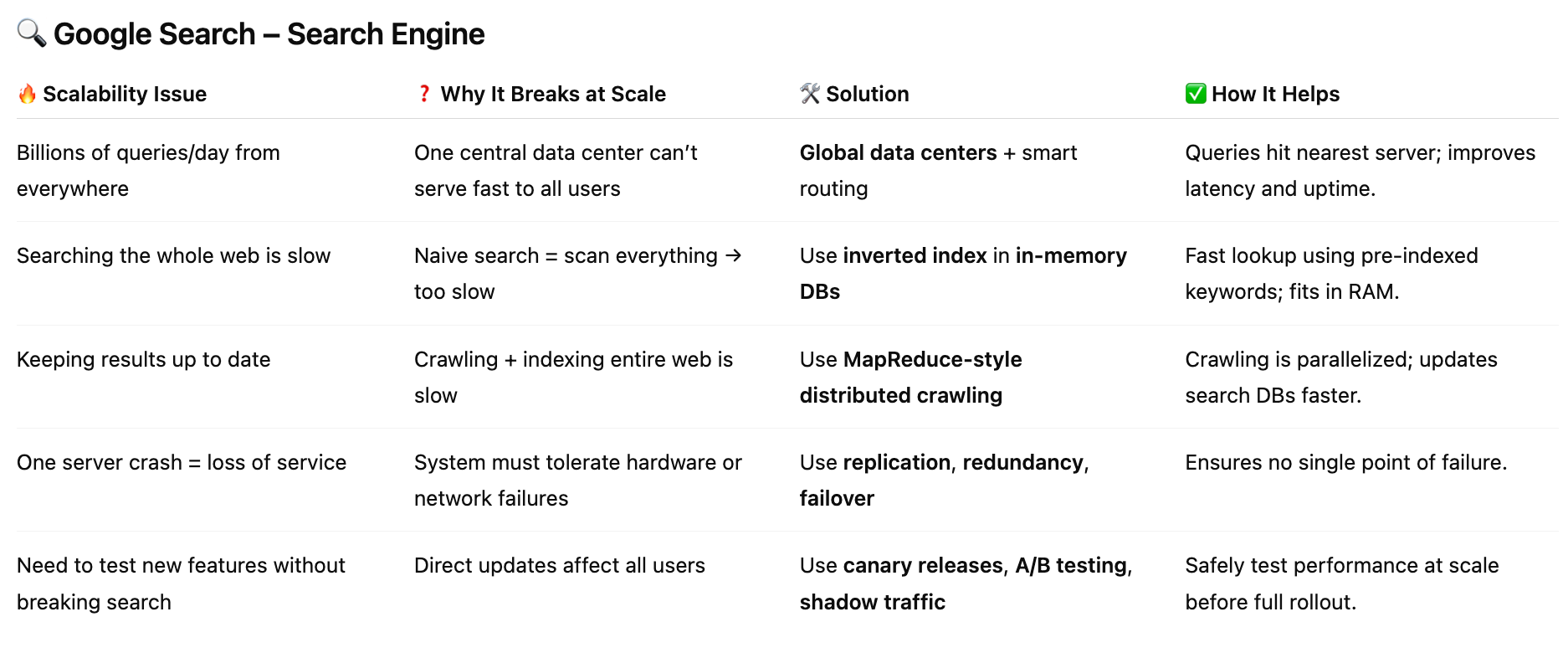

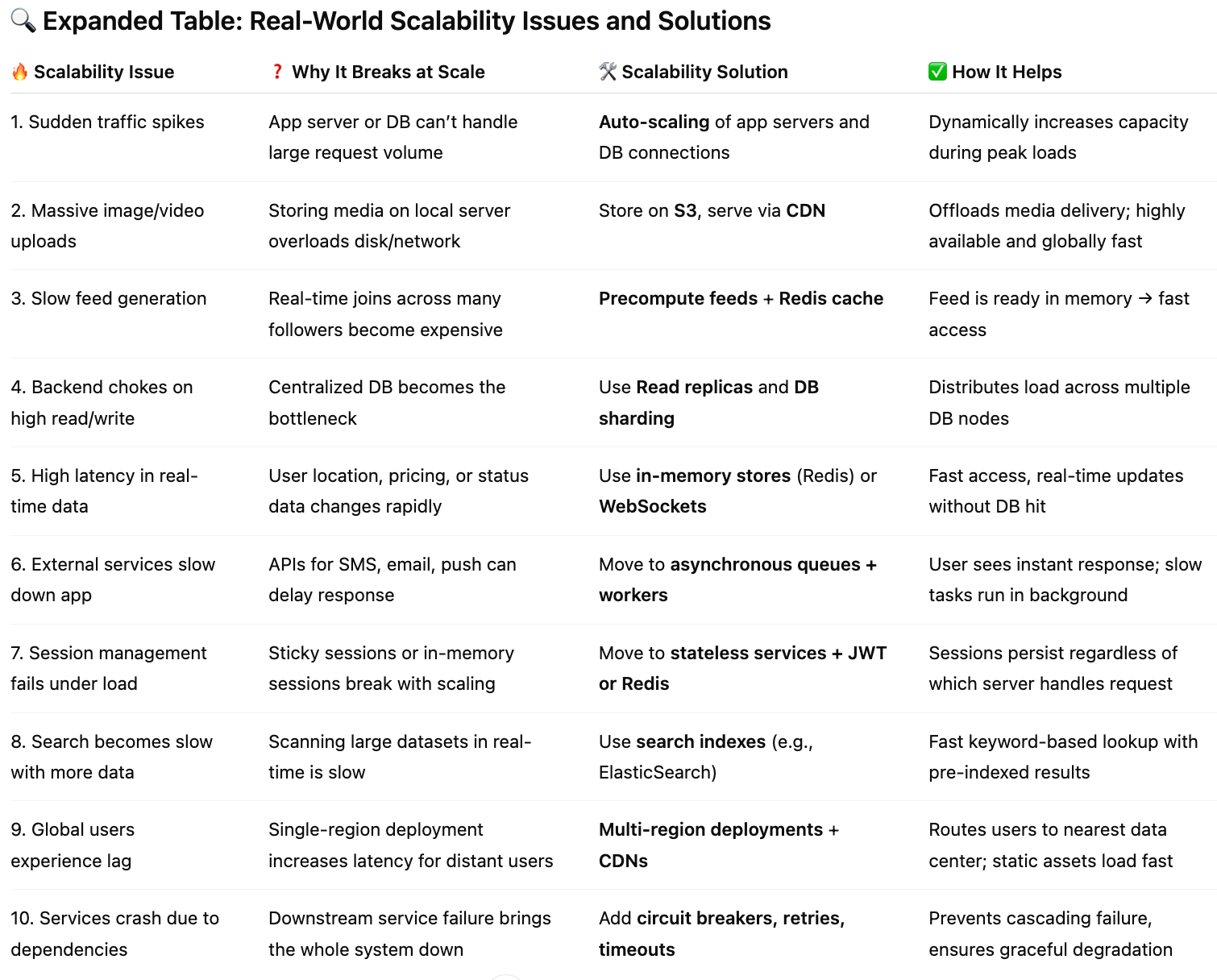

How real-world tech giants like Netflix, Instagram, Uber, Amazon, and Google solve massive scalability challenges

A clear, practical table of problems → solutions → design patterns

Whether you're building a side project or scaling a billion-user platform, understanding these principles will help you design systems that stay fast, stable, and cost-effective under pressure.

Why is Scalability Important?

Unpredictable Growth: Your product might go viral tomorrow.

Global Reach: Users from different regions need quick, reliable access.

Cost Efficiency: You only want to scale when needed.

Performance Consistency: More users shouldn't slow things down.

System Reliability: Failures in one part shouldn't crash everything.

Types of Scalability

Vertical Scaling (Scale-Up):

You add more resources (CPU, RAM, SSD) to an existing server. It's simple but limited—you can’t upgrade forever, and it’s often expensive.Horizontal Scaling (Scale-Out):

You add more servers or instances. Requests are spread across multiple machines. This is how most large-scale systems scale. It’s more complex but has virtually no upper limit.Diagonal Scaling:

A combination of both—start with vertical scaling and shift to horizontal when needed.

Key Components of a Scalable Architecture

Load Balancers: Distribute incoming traffic evenly across servers to avoid overload.

Caching: Store frequently accessed data in memory (like Redis or Memcached) to reduce database load.

Database Sharding & Partitioning: Split large databases into smaller chunks (shards) for faster access and writes.

Asynchronous Processing: Use message queues (e.g., Kafka, SQS) to decouple tasks and prevent bottlenecks.

Stateless Services: Design components to not depend on local state, so they can scale independently.

Auto-Scaling: Cloud platforms like AWS, GCP, and Azure automatically adjust resources based on usage.

CDNs (Content Delivery Networks): Serve static content (images, videos) from locations closer to the user, improving speed and reducing backend load.

Design Principles for Scalability

Design for failure: Expect services to go down and ensure the system degrades gracefully.

Loose coupling: Ensure components interact through well-defined APIs so each part can scale independently.

Idempotency: Repeated requests shouldn’t have unintended side effects.

Observability: Use metrics, logs, and traces to monitor and react to scaling issues.

🔧 Practical Examples

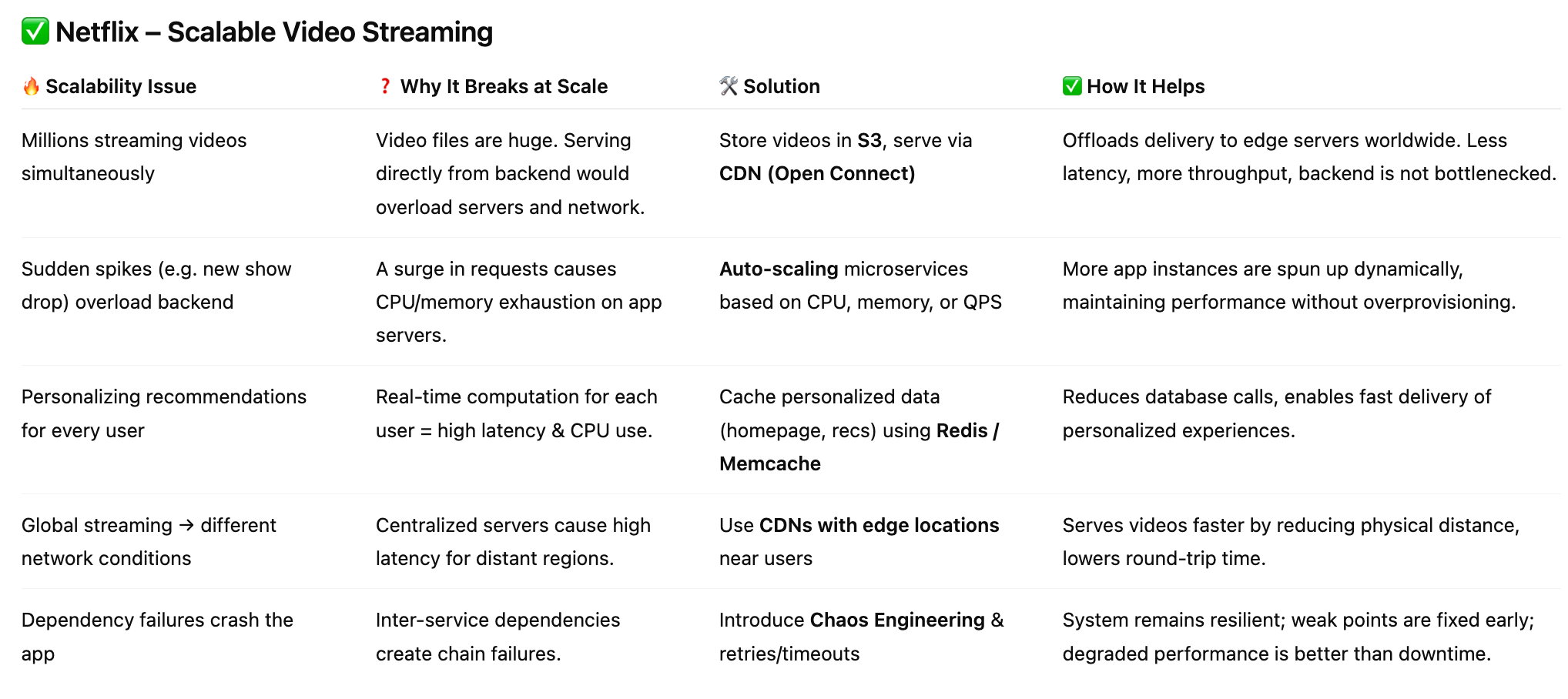

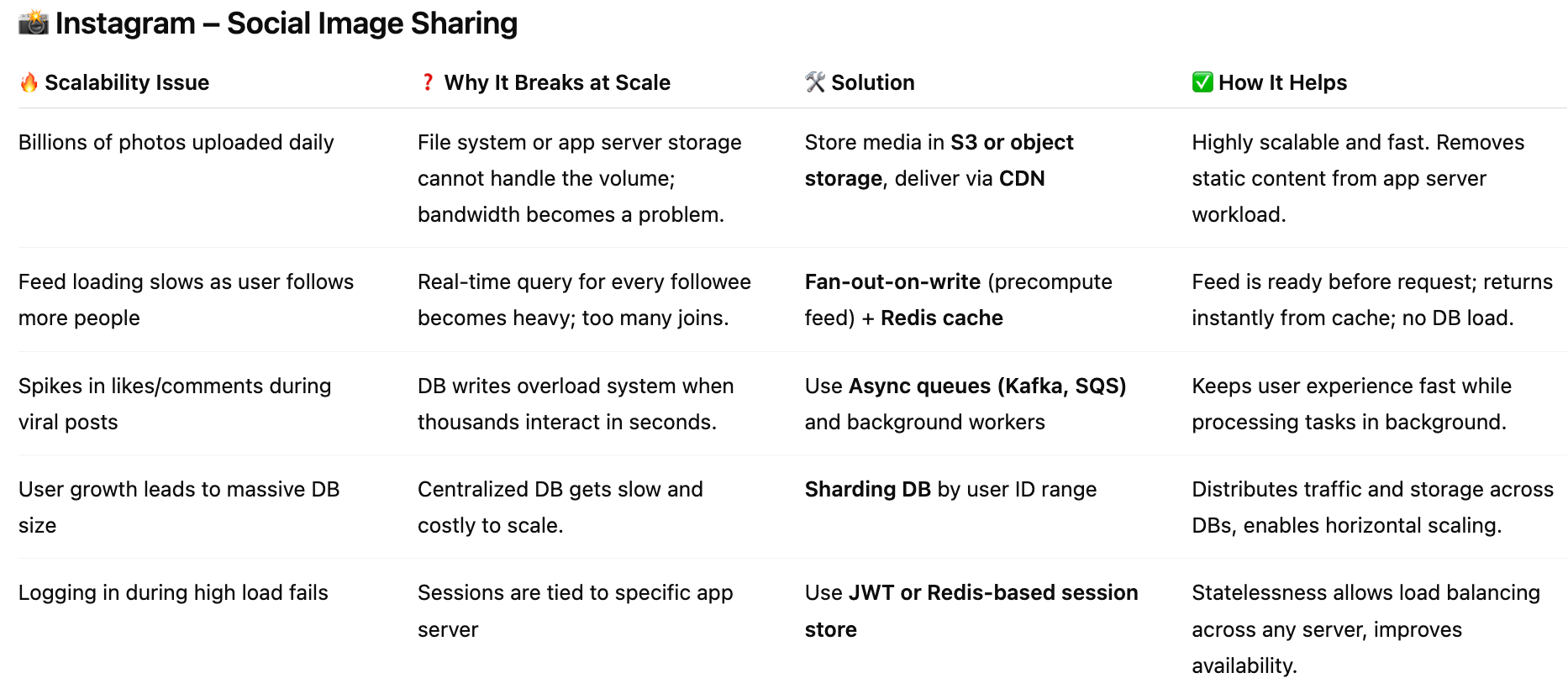

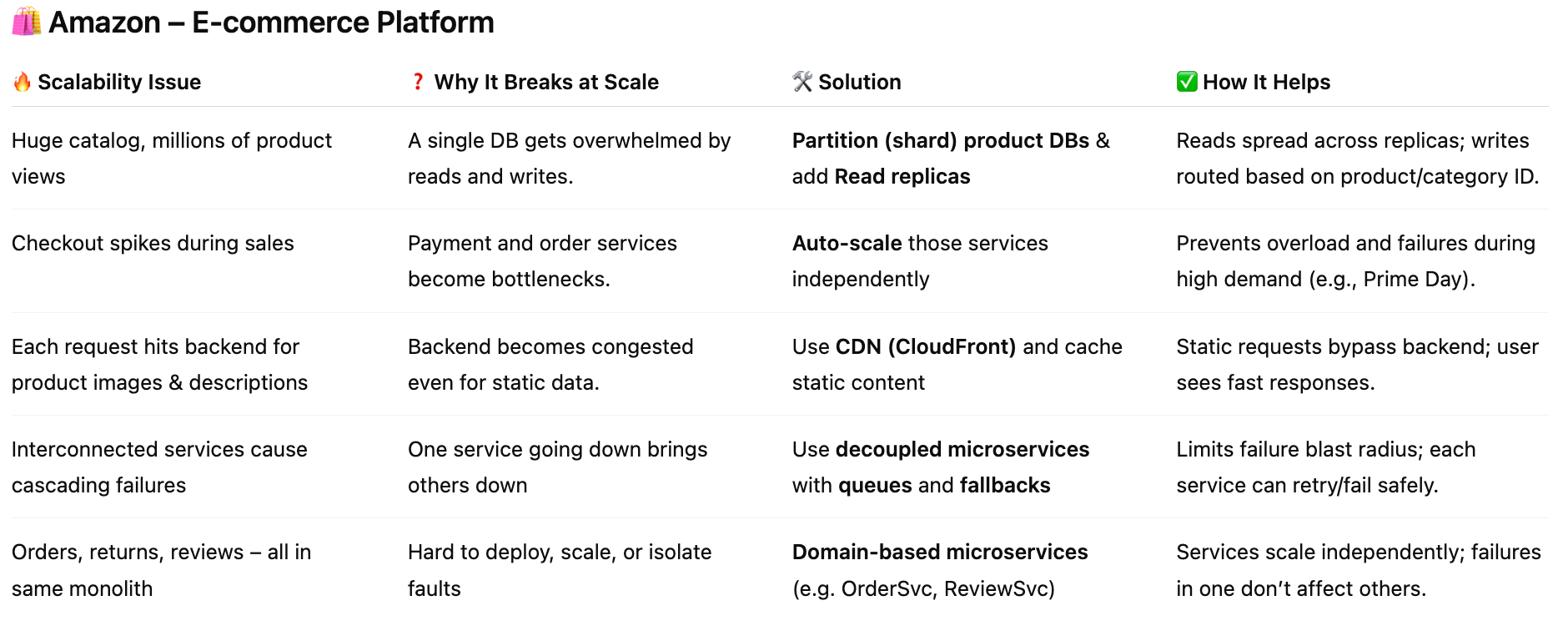

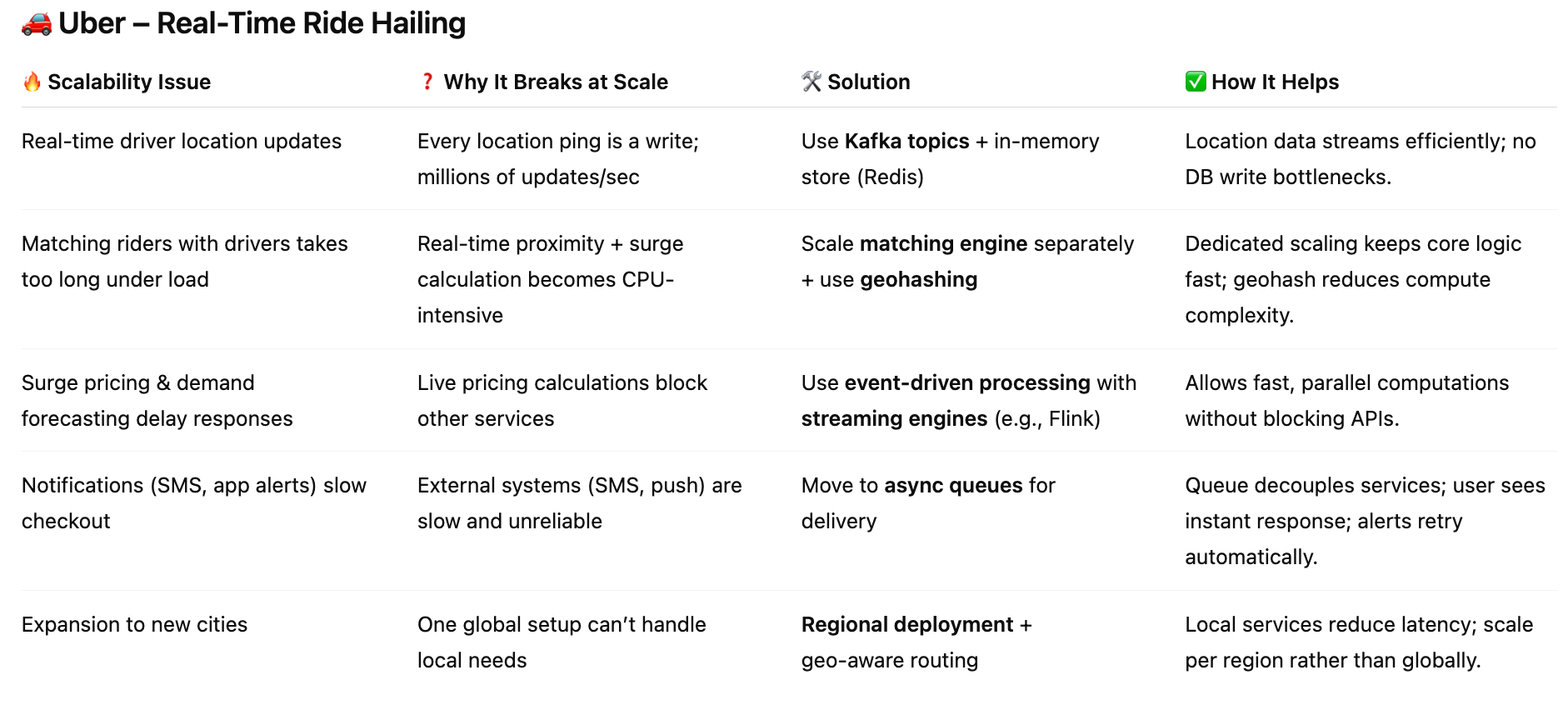

The below table highlights what solution can be taken address any kind of scalability issues.

Scalability isn’t just a technical checkbox. It’s a philosophy that starts at the design stage and continues with testing, monitoring, and adapting. From cloud services and databases to queues and caches, scalable systems rely on thoughtful architecture, not brute force.

Whether you're building a side project or an enterprise product, designing for scale ensures your system can grow with your users—without grinding to a halt.